Technology gets smarter every day as researchers tinker with something new, but when it comes to teaching a robot to help humans efficiently, we still have a long way to go. Google may have made a breakthrough here by using language modelling to help robots understand and respond to humans better. Google says these advances have made robots remarkably better at executing complex tasks.

“Do what I mean and not what I say” is the tagline Google is using to describe the work. The virtual assistants we use in daily life, such as Google Assistant, Siri, and Alexa, keep getting smarter and more efficient. They can now speak in gender-neutral voices and wait on hold during calls. Robotics is advancing in big steps.

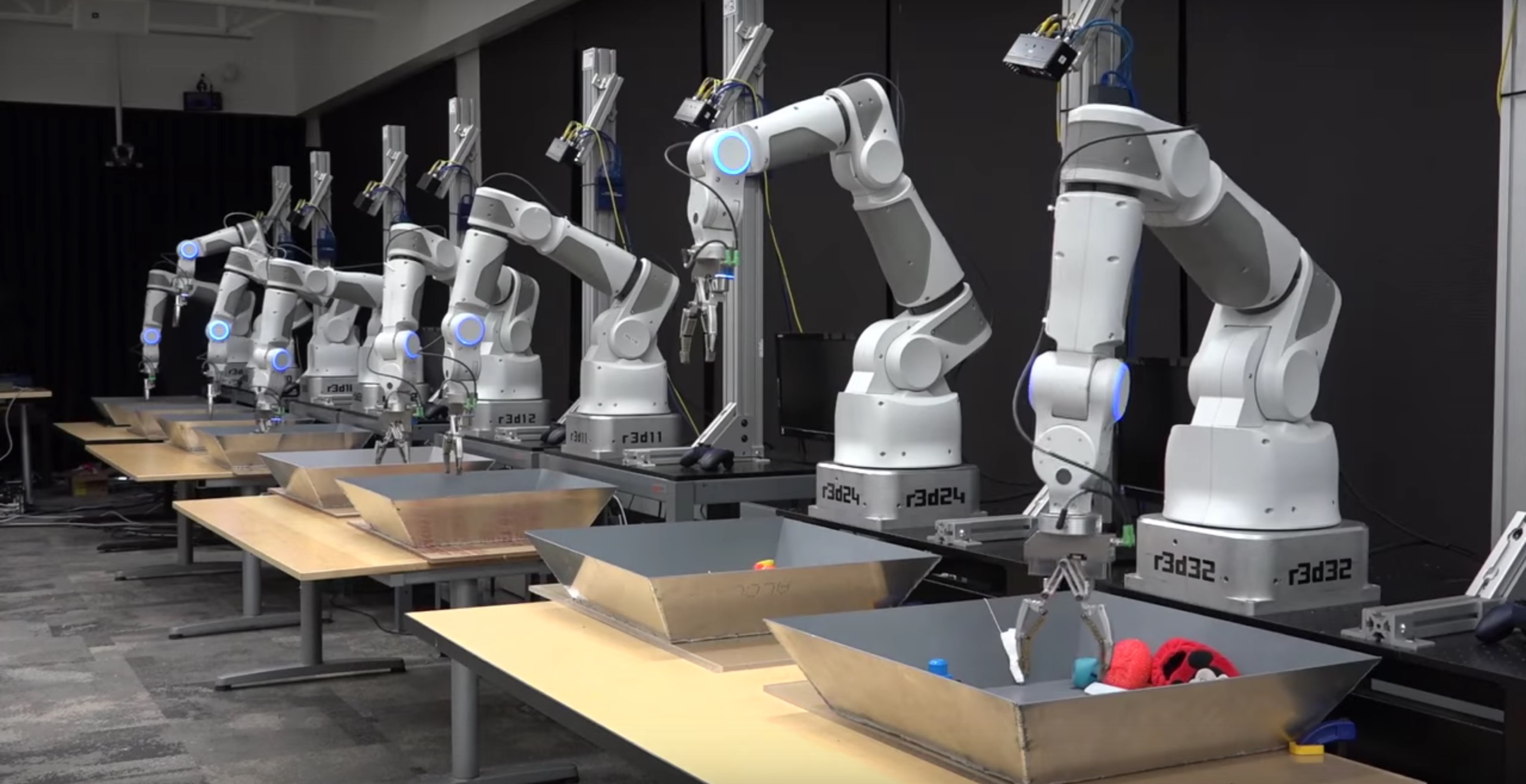

Teaching robots to carry out repetitive tasks is not easy, even in a controlled environment, and it is harder still in places humans cannot venture. Robots have been built to communicate with humans and carry out tasks in response to voice commands, but the process is harder than it seems and still has gaps.

One of the biggest challenges roboticists face is making a robot fast, precise, and adaptive all at once, the combination a task like playing a ping-pong match demands. A robot can be fast but not adaptive, which is a problem in an industrial setting. Getting the right blend of all three is hard.

It’s said that practice builds skill, but how a robot should practice is something we have yet to figure out. Beyond speed and precision, scientists are struggling to bridge human language and robot code. The whole system needs major upgrades before robots understand human language to a T. A sentence that is simple and ordinary for a human can carry a lot of information for a robot. Take a request like “I’m a bit thirsty, could you please grab me a drink from the kitchen?”

What is perfectly normal to us can confuse a robot, because a single request like this raises many questions for it to resolve. A robot is, after all, a machine that lacks the understanding and reasoning of a human brain. If it takes the sentence literally, the robot might simply answer “yes”, because it is indeed capable of getting a drink! As things stand, users have to tell the robot explicitly, in short sentences, what its next task is.

These are the kinds of problems Google faces during development. Users of Google Assistant or Siri have run into similar situations, where the assistant takes a request literally or fails to understand it because the sentence is too complex.

Google has been working toward robots that work out what a human actually wants rather than interpreting requests literally. Along the way, it is trying to bring some of the ways human neurons work into the code and protocols of a binary brain, which might one day become more intelligent and capable than humans can even imagine.

For more updates like this, keep reading techinnews.